We can now use an LLM to make use of the data in the database. Let's get an LLM such as GPT-3 using `from langchain import OpenAI`, or we could get ChatGPT using `from langchain.chat_models import ChatOpenAI`.

The Indexes module contains utility functions for working with documents and integrations with different vector databases.

Agents: Some applications require not just a predetermined chain of calls to LLMs or other tools, but potentially an unknown chain that depends on the user's input. In these types of chains, there is an agent with access to a suite of tools. Depending on the user's input, the agent can decide which tool, if any, to call.

Chains: Using an LLM in isolation is fine for some simple applications, but many more complex ones require chaining LLMs, either with each other or with other experts. LangChain provides a standard interface for Chains, as well as some common implementations of chains for ease of use.

Currently, the API is not well documented and is disorganized, but if you are willing to dig into the source code, it is well worth the effort. I advise you to watch an introductory video to get more familiar with it.

Let's first write down our API keys, starting with `import os`.

We upload the data to the vector database. The default OpenAI embedding model used in LangChain is 'text-embedding-ada-002' (see OpenAI embedding models); it is used to convert data into embedding vectors. This step uses `import pinecone`, `from langchain.vectorstores import Pinecone`, and the `OpenAIEmbeddings` class.

We can now search for relevant documents in that database using the cosine similarity metric: set `query = "What were the most important events for Google in 2021?"` and then call `search_docs = doc_db.similarity_search(query)`.
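To make the cosine similarity metric mentioned above concrete, here is a minimal standard-library sketch. It is not how LangChain or Pinecone implement `similarity_search`; the function names, the toy documents, and the three-dimensional "embeddings" are all made up for illustration (real 'text-embedding-ada-002' vectors are much longer).

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0  # treat a zero vector as similar to nothing
    return dot / (norm_a * norm_b)


def toy_similarity_search(query_vec, docs, k=2):
    """Return the texts of the k documents most similar to query_vec.

    `docs` is a list of (text, embedding) pairs; hypothetical stand-in
    for what a vector database stores.
    """
    scored = [(cosine_similarity(query_vec, vec), text) for text, vec in docs]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [text for _, text in scored[:k]]


# Toy data: hand-written vectors, not real embeddings.
toy_docs = [
    ("Google 2021 earnings report", [0.9, 0.1, 0.0]),
    ("Recipe for apple pie", [0.0, 0.2, 0.9]),
    ("Google product launches in 2021", [0.8, 0.3, 0.1]),
]
toy_query = [1.0, 0.2, 0.0]

top_docs = toy_similarity_search(toy_query, toy_docs, k=2)
print(top_docs)
```

In a real setup the embedding model produces the vectors and the vector database performs the ranking; the sketch only shows the ranking idea.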

Prompts: This module allows you to build dynamic prompts using templates. It can adapt to different LLM types depending on the context window size and input variables used as context, such as conversation history, search results, previous answers, and more.

Models: This module provides an abstraction layer to connect to most available third-party LLM APIs. It has API connections to ~40 public LLMs, chat and embedding models.

Memory: This gives the LLMs access to the conversation history.

Indexes: Indexes refer to ways to structure documents so that LLMs can best interact with them.

All images in that list are of the last page. Sometimes the output is an empty 1x1 image instead, which I also haven't found a reason for. So if you have any idea what that is about, please do let me know!

EDIT: So, when I try this in a random notebook, it actually works fine. I've removed a few detours I used in my original code, and now it works. Still not sure what the underlying reason was, though.


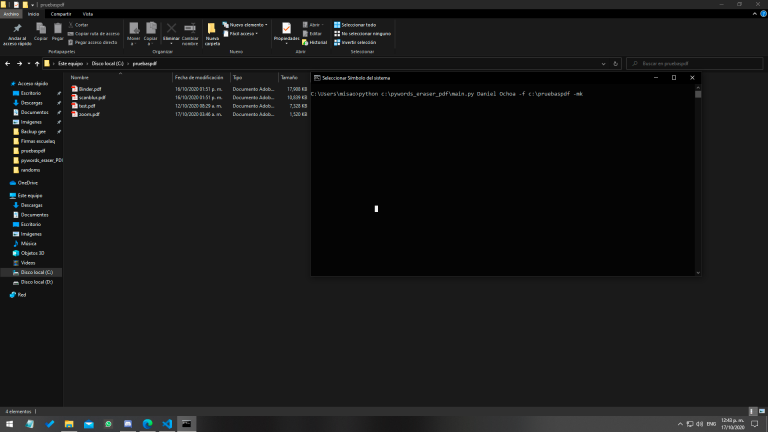

So when I use the pdf2image Python package and pass a multi-page PDF into the `convert_from_bytes()` or `convert_from_path()` method, the output array does contain multiple images, but all images are of the last PDF page (whereas I would have expected each image to represent one of the PDF pages).

Any idea why this would occur? I can't find any solution to this online. I've found some vague suggestion that the `use_cropbox` argument might be used, but modifying it has no effect.

The relevant parts of my code are the step commented `# Read PDF and convert pages to PPM image objects` and the error handler `print(f" Could not convert PDF pages to JPEG image due to error: \n ''")`.
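For anyone trying to reproduce this, a small diagnostic can at least confirm whether the returned images really are identical, rather than judging by eye. The sketch below is only a debugging aid under stated assumptions, not an explanation of the cause: the helper names (`page_fingerprints`, `all_pages_identical`, `check_pdf`) are hypothetical, and `check_pdf` assumes pdf2image and its poppler dependency are installed.

```python
import hashlib


def page_fingerprints(pages_as_bytes):
    """Return one SHA-256 hex digest per page, given raw bytes per page."""
    return [hashlib.sha256(data).hexdigest() for data in pages_as_bytes]


def all_pages_identical(pages_as_bytes):
    """True when there is more than one page and every page has the same bytes."""
    fingerprints = page_fingerprints(pages_as_bytes)
    return len(fingerprints) > 1 and len(set(fingerprints)) == 1


def check_pdf(pdf_path):
    """Convert a PDF with pdf2image and report whether all pages look identical."""
    # Imported lazily so the helpers above work without pdf2image installed.
    from pdf2image import convert_from_path

    images = convert_from_path(pdf_path)  # list of PIL images, one per page
    for index, image in enumerate(images, start=1):
        # Printing the size makes unexpectedly tiny outputs easy to spot.
        print(f"page {index}: size={image.size}")
    return all_pages_identical([image.tobytes() for image in images])
```

Calling `check_pdf` on the same file both in the original script and in a fresh notebook would show whether the duplication comes from the conversion itself or from the code that handles the images afterwards.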
